
Introduction

Docker containers are standardized units that can be created on demand to provide a defined set of applications for a dedicated environment. In a GitLab pipeline, they provide the environment in which the jobs are executed.

Each job can be executed inside a separate Docker container, which provides an isolated environment for the job to run in. This allows for a more consistent and predictable environment across different projects.

My personal recommendation to you:

You should try to use your own Docker images for most of your GitLab projects.

There are only a few scenarios where using a Docker container doesn't make sense. A major advantage is that all dependencies and requirements are defined once in the Docker image, which can then be reused by any further pipeline job.
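As a minimal sketch of that reuse, the `default` keyword in `.gitlab-ci.yml` can set one image (and one runner tag) for every job that does not define its own. The image path `$CI_REGISTRY_IMAGE/dnac:1.0` matches the image built later in this post, while the job names `lint_playbooks` and `check_ansible` are hypothetical and only for illustration.

```yaml
# Hypothetical .gitlab-ci.yml fragment: every job that does not define its own
# "image:" or "tags:" inherits these defaults and runs in the same prebuilt image.
default:
  image: $CI_REGISTRY_IMAGE/dnac:1.0
  tags:
    - docker-runner

lint_playbooks:            # hypothetical job name
  script:
    - ansible-lint --version

check_ansible:             # hypothetical job name
  script:
    - ansible --version
```

Both jobs get Ansible and ansible-lint from the image itself, so neither job has to install anything in its script.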

If you would like to use the GitLab Docker registry, one requirement is to configure GitLab with SSL and enable the Docker registry. I covered this topic in my previous blog post, which you can check out here:

GitLab server with a self-signed certificate and embedded Docker registry

When it makes sense to use your own Docker images in GitLab pipelines:

- When you need a clean environment for every job.

- When you need to ensure that the projects run independently from each other.

What to consider:

- The overhead of pulling (downloading) a Docker image for every job execution. That's the reason why I like to use my embedded GitLab registry.
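One way to reduce that overhead, sketched here under assumptions, is the `pull_policy` option of `image:` in `.gitlab-ci.yml`. According to the GitLab documentation it needs GitLab and GitLab Runner 15.1 or later, and the requested policy must be permitted by the Docker runner's `allowed_pull_policies` setting in its `config.toml`, so please verify this against your versions. The job name `check_dnac_image` is hypothetical.

```yaml
check_dnac_image:          # hypothetical job name
  tags:
    - docker-runner
  image:
    name: $CI_REGISTRY_IMAGE/dnac:1.0
    # Use the copy already cached on the runner host; pull only if it is missing.
    pull_policy: if-not-present
  script:
    - ansible --version
```

The trade-off: with `if-not-present`, an image that is rebuilt and pushed under the same tag (for example `1.0`) is not pulled again on a runner that already has the old copy, so bump the tag whenever the Dockerfile changes.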

Requirements

In the following example, I am using a GitLab server with two GitLab runners (one with the shell executor and one with the Docker executor).

If you want to learn more about GitLab runners and executors, I recommend checking out the GitLab documentation page:

https://docs.gitlab.com/runner/register/

- The shell runner (registered with the tag shell-runner) is used to build the Docker images and push them to the GitLab container registry.

- The Docker runner (registered with the tag docker-runner) is used to pull the Docker images and execute the jobs defined in the pipeline.

In my pipeline, I will use two different Docker images for two different use cases: one Docker container is dedicated to an Ansible job and the second one to a Terraform job.

The Ansible Docker container is used to execute a playbook against Cisco DNA Center.

The Terraform Docker container is used to execute an IaC change against Cisco ACI.

Define the Docker files

First, create the following folder structure and files in your GitLab repository (the brackets indicate whether an entry is a folder or a file):

```
docker (Folder)
├── aci (Folder)
│   └── Dockerfile (File)
└── dnac (Folder)
    └── Dockerfile (File)
```

Copy the following content into docker > dnac > Dockerfile:

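```dockerfile
FROM ubuntu:22.04

# Install the OS packages, the Python tooling for Ansible, DNA Center and pyATS,
# and the Cisco DNA Center Ansible collection.
# Note: on Ubuntu 22.04, python3-pip installs packages for the default
# python3 interpreter (3.10), not for python3.11.
RUN apt-get update && \
    apt-get install -y gcc python3.11 git && \
    apt-get install -y python3-pip ssh && \
    pip3 install --upgrade pip && \
    pip3 install ansible && \
    pip3 install dnacentersdk && \
    pip3 install jmespath && \
    # Quoted so the shell does not treat [full] as a glob pattern.
    pip3 install "pyats[full]" && \
    pip3 install ansible-lint && \
    ansible-galaxy collection install cisco.dnac
```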

Copy the following content into docker > aci > Dockerfile:

```dockerfile
FROM ubuntu:22.04

# Install the prerequisites, add the HashiCorp APT repository, install Terraform
# and the iac-validate / iac-test Python tools.
RUN apt-get update && \
    apt-get install -y gnupg software-properties-common curl gcc python3.11 python3-pip git && \
    curl -fsSL https://apt.releases.hashicorp.com/gpg | apt-key add - && \
    apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main" && \
    apt-get update && \
    # -y is required because the build cannot answer an interactive prompt.
    apt-get install -y terraform && \
    pip3 install --upgrade pip && \
    pip3 install iac-validate==0.1.2 && \
    pip3 install iac-test==0.1.0
```

Create the pipeline with a Build stage

Add the following content to the .gitlab-ci.yml file in the root of your repository:

```yaml
variables:
  IMAGE_NAME_DNAC: $CI_REGISTRY_IMAGE/dnac
  IMAGE_TAG_DNAC: "1.0"
  IMAGE_NAME_ACI: $CI_REGISTRY_IMAGE/aci
  IMAGE_TAG_ACI: "1.0"

stages:
  - build

# Build both images on the shell runner.
build_image:
  stage: build
  tags:
    - shell-runner
  script:
    - docker build -t $IMAGE_NAME_DNAC:$IMAGE_TAG_DNAC docker/dnac/.
    - docker build -t $IMAGE_NAME_ACI:$IMAGE_TAG_ACI docker/aci/.

# Push the locally built images to the GitLab container registry.
# This job must run on the same shell runner host as build_image.
push_image:
  stage: build
  needs:
    - build_image
  tags:
    - shell-runner
  before_script:
    # --password-stdin keeps the password off the command line.
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
  script:
    - docker push $IMAGE_NAME_DNAC:$IMAGE_TAG_DNAC
    - docker push $IMAGE_NAME_ACI:$IMAGE_TAG_ACI
```
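Rebuilding both images on every push adds to the overhead mentioned earlier. A possible refinement, sketched here and not part of the original pipeline, is to add the build jobs to the pipeline only when something under docker/ changed, using `rules:changes`. Because push_image lists build_image in `needs`, both jobs get the same rule; any later job that needs push_image would then have to mark that need as `optional: true`.

```yaml
build_image:
  stage: build
  tags:
    - shell-runner
  rules:
    # Add this job to the pipeline only if a file under docker/ changed.
    - changes:
        - docker/**/*
  script:
    - docker build -t $IMAGE_NAME_DNAC:$IMAGE_TAG_DNAC docker/dnac/.
    - docker build -t $IMAGE_NAME_ACI:$IMAGE_TAG_ACI docker/aci/.

push_image:
  stage: build
  needs:
    - build_image
  tags:
    - shell-runner
  rules:
    # Same rule as build_image, so the "needs" reference always resolves.
    - changes:
        - docker/**/*
  before_script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
  script:
    - docker push $IMAGE_NAME_DNAC:$IMAGE_TAG_DNAC
    - docker push $IMAGE_NAME_ACI:$IMAGE_TAG_ACI
```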

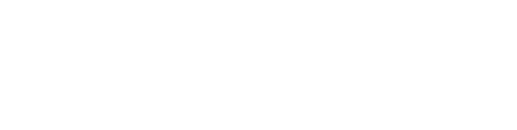

Validate the status of the pipeline:

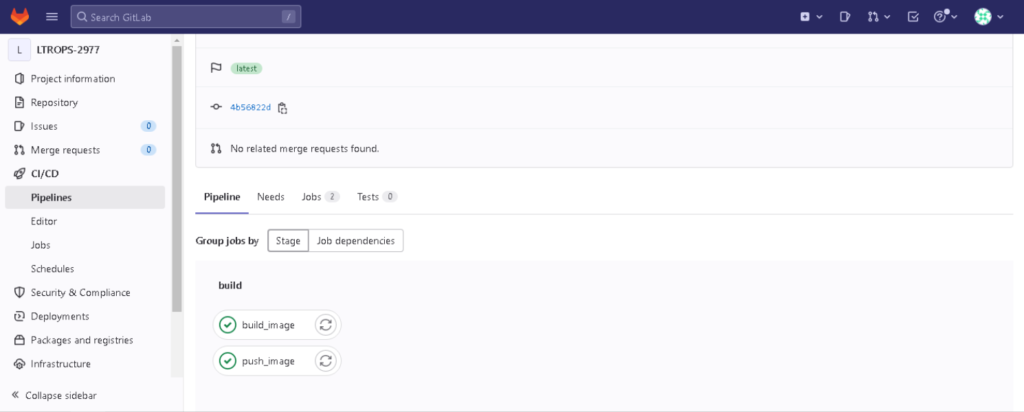

Check whether the Docker images have been successfully pushed to your GitLab container registry:

Extend the pipeline with an Execution stage

Now that you know the Build stage works as expected, it is worth validating whether the binaries (Ansible / Terraform) inside the images work as well.

To do that, add another stage to the pipeline that uses the Docker executor and runs commands within the Docker container.

In the following example, the Ansible and Terraform version commands as well as pip3 list are executed, and their output is saved as an artifact.

What is an Artifact?! 😳

Check out the following link: https://docs.gitlab.com/ee/ci/pipelines/job_artifacts.html
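The validate jobs in this section keep their log file only on_success. If you would rather keep the log when a check fails, for example to see why terraform could not run, one possible variant of the validate_aci_container job (a sketch, not the original job) redirects error output into the log and uploads the artifact with `when: always`:

```yaml
validate_aci_container:
  stage: validate
  needs:
    - push_image
  tags:
    - docker-runner
  image: $IMAGE_NAME_ACI:$IMAGE_TAG_ACI
  script:
    # "2>&1" also writes error messages (e.g. a missing binary) into the log.
    - terraform --version >> aci_container_output.log 2>&1
    - pip3 list >> aci_container_output.log 2>&1
  artifacts:
    paths:
      - "aci_container_output.log"
    # Upload the log whether the job succeeded or failed.
    when: always
    expire_in: "1 day"
```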

```yaml
variables:
  IMAGE_NAME_DNAC: $CI_REGISTRY_IMAGE/dnac
  IMAGE_TAG_DNAC: "1.0"
  IMAGE_NAME_ACI: $CI_REGISTRY_IMAGE/aci
  IMAGE_TAG_ACI: "1.0"

stages:
  - build
  - validate

build_image:
  stage: build
  tags:
    - shell-runner
  script:
    - docker build -t $IMAGE_NAME_DNAC:$IMAGE_TAG_DNAC docker/dnac/.
    - docker build -t $IMAGE_NAME_ACI:$IMAGE_TAG_ACI docker/aci/.

push_image:
  stage: build
  needs:
    - build_image
  tags:
    - shell-runner
  before_script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
  script:
    - docker push $IMAGE_NAME_DNAC:$IMAGE_TAG_DNAC
    - docker push $IMAGE_NAME_ACI:$IMAGE_TAG_ACI

# Run the DNA Center image on the Docker runner and record the tool versions.
validate_dnac_container:
  stage: validate
  needs:
    - push_image
  tags:
    - docker-runner
  image: $IMAGE_NAME_DNAC:$IMAGE_TAG_DNAC
  script:
    - ansible --version >> dnac_container_output.log
    - pip3 list >> dnac_container_output.log
  artifacts:
    paths:
      - "dnac_container_output.log"
    when: on_success
    expire_in: "1 day"

# Run the ACI image on the Docker runner and record the tool versions.
validate_aci_container:
  stage: validate
  needs:
    - push_image
  tags:
    - docker-runner
  image: $IMAGE_NAME_ACI:$IMAGE_TAG_ACI
  script:
    - terraform --version >> aci_container_output.log
    - pip3 list >> aci_container_output.log
  artifacts:
    paths:
      - "aci_container_output.log"
    when: on_success
    expire_in: "1 day"
```

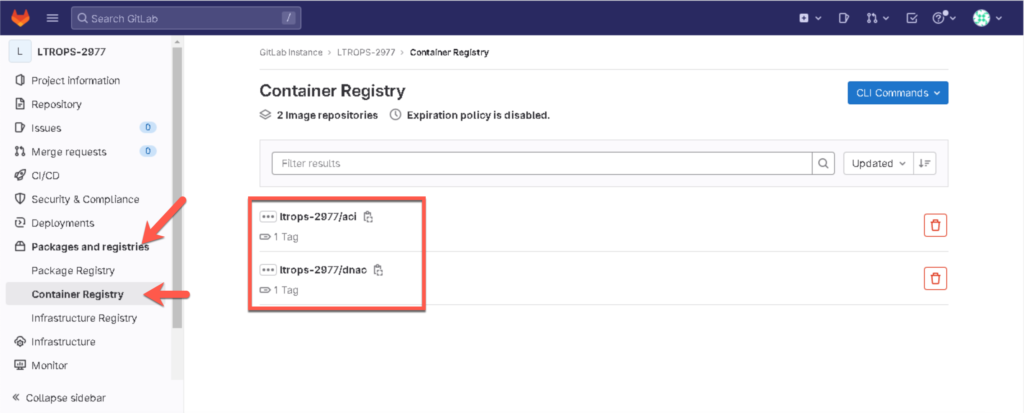

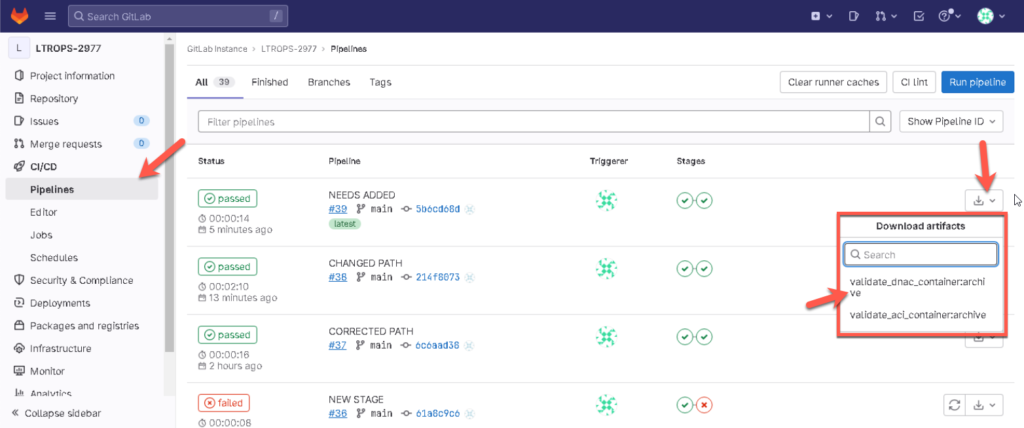

Validate the pipeline execution and check the job dependencies you configured with the needs keyword.

Again a new pipeline feature… Yes! A very useful one.

Check out the following link for more: https://docs.gitlab.com/ee/ci/yaml/index.html#needs

Download and validate the job artifacts:
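Instead of downloading the logs by hand every time, a later job could check them automatically. The sketch below is a hypothetical extension of the pipeline: the report stage and the check_versions job name are assumptions, and the job relies on `needs` with `artifacts: true` to fetch the log files of both validate jobs.

```yaml
# Hypothetical extension: "report" is added to the existing stages list.
stages:
  - build
  - validate
  - report

check_versions:            # hypothetical job name
  stage: report
  tags:
    - docker-runner
  image: $IMAGE_NAME_DNAC:$IMAGE_TAG_DNAC
  needs:
    # Download the artifacts of both validate jobs into this job.
    - job: validate_dnac_container
      artifacts: true
    - job: validate_aci_container
      artifacts: true
  script:
    # grep exits non-zero (and fails the job) if the text is not found.
    - grep -i "ansible" dnac_container_output.log
    - grep -i "terraform" aci_container_output.log
```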

Summary

Let’s summarize what you have learned during this post:

- You learned why it is useful to create your own Docker images #cleanenvironment

- You created two different Docker images for two different use cases

- You defined a pipeline with a build stage that builds the Docker images and pushes them to your GitLab container registry

- You validated the versions of Ansible and Terraform within your Docker containers by using the GitLab Docker runner